TimeXtender or Microsoft: which fits your organization better?

When it comes to data management, there are many solutions on the market, which makes choosing the right fit for your organization a challenge. In this blog, we compare and contrast the two most used stacks in the Netherlands right now: the Microsoft stack and TimeXtender.

Choosing the right solution

The choice between relying solely on the Microsoft stack or integrating TimeXtender is like stepping into a tailor-made suit shop. As a decision maker, you are in the process of selecting the ideal piece that perfectly aligns with your organization’s unique needs. Each option represents a different attire, and, like designing a suit, it’s about crafting a data strategy that seamlessly suits your goals. Here, you can explore the options against the backdrop of a changing data landscape and the quest for future-proof solutions, helping you create a data management strategy that’s tailor-made for your success.

To first understand the options available, you need to be aware of the differences, similarities, and capabilities of each tool.

Microsoft Fabric

What is it?

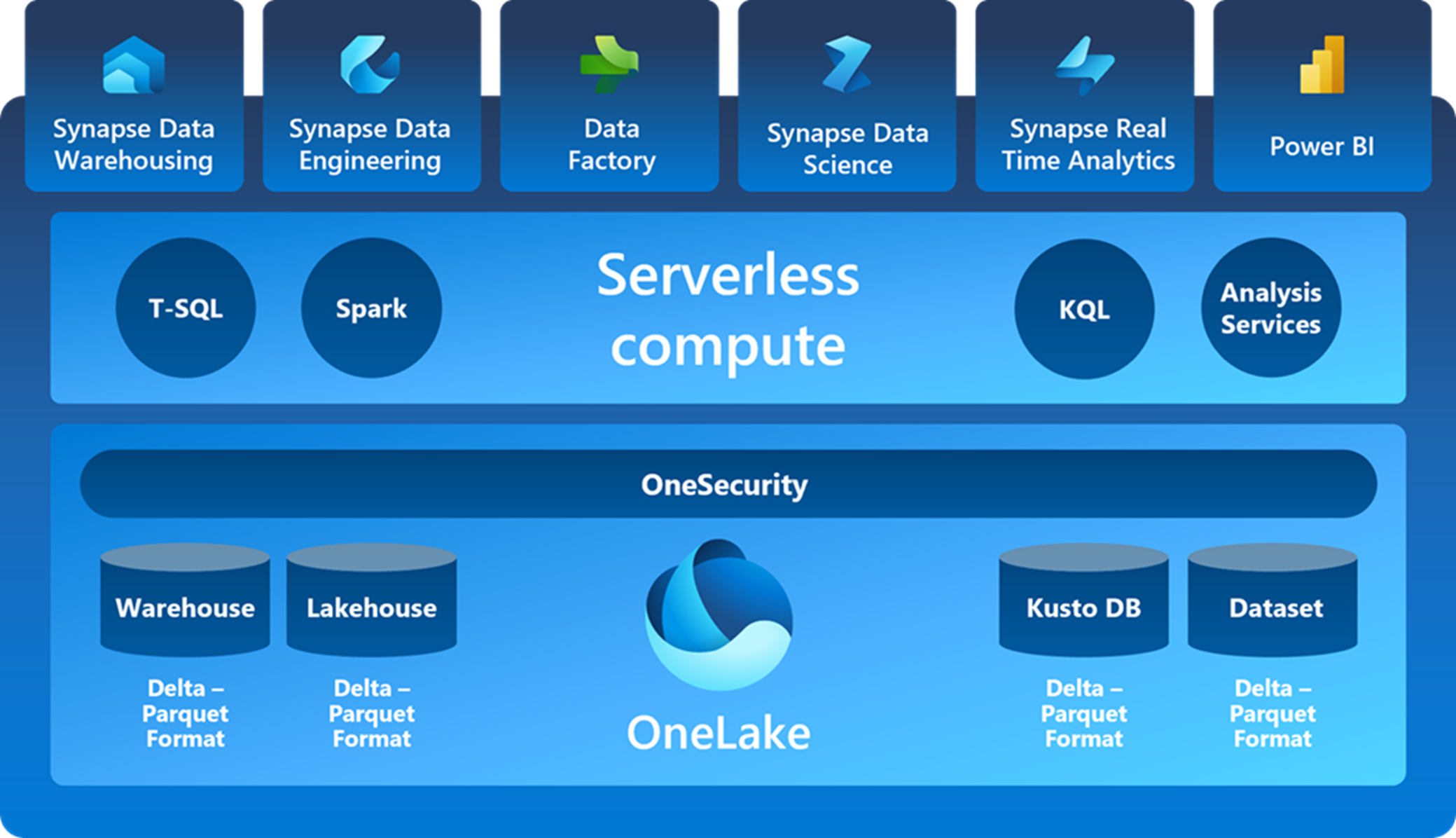

Offered as Software as a Service (SaaS), Fabric is designed to remove the complexity of integrating all data activities within an organization. By standardizing the storage of data and combining Data Warehousing, Data Engineering, Data Factory, Data Science, Real-Time Analytics and Power BI, it makes collaboration between teams and team members more seamless. It also comes with unified governance principles and a single purchase model for computing resources, making both more efficient for your organization. For a deeper dive into its different propositions, you can refer to our other blog on Microsoft Fabric; here we look at what Microsoft has to offer when it comes to data management.

Data management in Microsoft Fabric

Microsoft offers a comprehensive set of tools and services for data engineering, a crucial part of the data lifecycle that involves collecting, processing, and preparing data for analysis and consumption. Microsoft’s data engineering proposition is centered around its Azure cloud platform and a wide range of products and services designed to help organizations manage their data effectively. Microsoft’s key services for data management include:

- Azure Data Factory to create, schedule, and manage data pipelines.

- Azure Databricks for big data processing, data exploration, and machine learning.

- Azure Synapse Analytics, which combines enterprise data warehousing and big data analytics.

- Azure Stream Analytics for real-time data ingestion and processing.

- Azure Data Lake Storage, a scalable and secure data lake solution for storing large amounts of structured and unstructured data.

- Azure SQL Database, a managed relational database service for structured data storage and processing.

- Azure Logic Apps and Azure Functions, serverless services that can be used to build data processing workflows and automation.

TimeXtender

What is it?

TimeXtender serves as a low-code software platform with a primary focus on simplifying and automating the intricate steps involved in data integration, modeling, and preparation for analytical purposes. Its core mission revolves around streamlining the often-complex process of extracting, transforming, and loading data from diverse sources into a centralized data warehouse. By doing so, it empowers organizations to effortlessly access and analyze their data, thereby facilitating data-driven decision-making with ease and efficiency.

Where TimeXtender can play a part

TimeXtender is not a direct substitute for all of Microsoft’s offerings. However, it offers several capabilities that can complement Microsoft’s data engineering features and, in some cases, replace them:

- Integration: Similar to Azure Data Factory, TimeXtender can extract data from various sources, transform it, and load it into data warehouses, data marts, or data lakes.

- Metadata Management: Allows you to document, track, and manage data lineage, transformations, and dependencies.

- Data Transformation: TimeXtender can replace some of the functionality provided by services like Azure Data Factory, Azure Databricks, or Azure Data Lake Analytics for ETL (Extract, Transform, Load) tasks.

- Automation: TimeXtender automates steps throughout the data integration process, similar to what Azure Logic Apps and Azure Functions offer for workflow orchestration and event-driven data processing.

- Data Warehousing: TimeXtender can be used to create and manage data warehouses, making it a potential alternative to Azure Synapse Analytics for smaller-scale data warehousing needs.

However, there are several areas where TimeXtender may not provide a direct substitute:

- Real-time Data Processing: Azure Stream Analytics is designed for real-time data processing and event-driven architectures, which is not a primary focus of TimeXtender.

- Big Data Processing: Unlike services such as Azure HDInsight, TimeXtender is not specifically designed for big data processing at scale. It’s better suited for traditional data integration and warehousing scenarios.

- Serverless Computing: Services like Azure Functions are used for serverless computing and custom data processing tasks triggered by events. TimeXtender doesn’t provide serverless computing capabilities.

- Data Lake Storage: Azure Data Lake Storage is optimized for large-scale, unstructured data storage, which is not the primary function of TimeXtender. TimeXtender is more focused on data integration and automation.

What is the right fit for you?

Using only the Microsoft Stack:

If your organization is heavily invested in the Azure ecosystem, including Azure Synapse Analytics (formerly Azure SQL Data Warehouse), Azure Data Lake Storage, Azure Data Factory, and other Azure services, it may make sense to leverage the full suite of Microsoft tools. Also, if your data engineering team is already well-versed in Microsoft technologies and lacks experience with third-party tools like TimeXtender, sticking with the Microsoft stack can be more straightforward and cost-effective in terms of training and skill development.

Moreover, using only Microsoft services can simplify your cost management, as you’ll have a single billing platform (Azure) for all your data-related expenditures. This can make it easier to monitor and optimize your cloud costs.

Integrating TimeXtender:

If your data integration requirements are complex and involve a wide range of data sources, formats, and transformations, TimeXtender’s user-friendly interface and automation capabilities can simplify the process and reduce development time. It also excels in metadata management, making it an excellent choice if you need strong data governance, lineage tracking, and documentation of data transformations.

If your organization operates in a hybrid cloud or multi-cloud environment, where you use a combination of cloud providers or on-premises data sources, TimeXtender’s flexibility can help bridge the gap. Its low-code approach also makes data integration accessible to a wider set of users who do not have a background in data engineering.

In many cases, organizations opt for a combination of both approaches. They use Microsoft’s native services for certain tasks and integrate TimeXtender where it adds value, such as for data integration, metadata management, and rapid development. Ultimately, the choice should align with your organization’s unique needs, skillsets, and long-term data strategy. Just like with your local tailor, it’s important to evaluate the possibilities with experienced consultants and assess how each option fits into your overall data engineering architecture. This way you can obtain the best result for your business.

Webinar: How to standardise reporting with IBCS?

When your company produces dozens of monthly (management) reports, dashboards and ad hoc analyses, chances are that these reports and dashboards all look slightly different. In other sectors, having standardized communication is commonplace. The International Business Communication Standards (IBCS) standardizes the language in dashboards and reports. But what does it mean to standardize reporting language? In 20 minutes, our expert will tell you everything you need to know.

We have been working with the International Business Communication Standards (IBCS) for years. This standard unifies the language of reports and dashboards so that business communication becomes more effective. A study done by the Technische Universität München (TUM) shows that with correct use of IBCS®, analysis speed increases by 46% and decision accuracy increases by 61%.

But what is IBCS all about? And how can you implement these standards within your organization? In 20 minutes, our expert explains all the details of how IBCS can help your organisation.


How do you optimize the Order to Cash process?

Every finance manager knows that the Order to Cash (O2C) process is the lifeblood of any organization. Since it's the journey every customer goes through, from the time an order is placed to the time payment is processed, a smooth, efficient and error-free O2C process is crucial to an organization's financial success and customer satisfaction. But how can we ensure and improve the efficiency of this complex process? The answer lies in Process Mining.

What is Process Mining?

Process Mining is a data analysis technique that aims to discover, monitor and improve real processes by extracting knowledge from event logs, which record the execution of processes in information systems.
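To make the idea of extracting knowledge from event logs concrete, here is a minimal sketch in plain Python. The event log, the activity names, and the helper functions are all hypothetical and chosen for illustration only; dedicated Process Mining tools do far more than this. The sketch groups events per order, derives the sequence of activities each order followed (the process variant), and measures the lead time from the first to the last event.

```python
from collections import Counter, defaultdict
from datetime import datetime

# Hypothetical O2C event log: (case id, activity, timestamp).
EVENT_LOG = [
    ("order-1", "Order placed", "2024-01-02 09:00"),
    ("order-1", "Goods shipped", "2024-01-04 15:00"),
    ("order-1", "Invoice sent", "2024-01-05 10:00"),
    ("order-1", "Payment received", "2024-01-20 12:00"),
    ("order-2", "Order placed", "2024-01-03 11:00"),
    ("order-2", "Invoice sent", "2024-01-03 16:00"),
    ("order-2", "Goods shipped", "2024-01-06 09:00"),
    ("order-2", "Payment received", "2024-02-10 11:00"),
    ("order-3", "Order placed", "2024-01-05 08:00"),
    ("order-3", "Goods shipped", "2024-01-07 13:00"),
    ("order-3", "Invoice sent", "2024-01-08 09:00"),
    ("order-3", "Payment received", "2024-01-19 17:00"),
]


def build_traces(event_log):
    """Group events per case and sort them chronologically."""
    cases = defaultdict(list)
    for case_id, activity, timestamp in event_log:
        moment = datetime.strptime(timestamp, "%Y-%m-%d %H:%M")
        cases[case_id].append((moment, activity))
    return {case_id: sorted(events) for case_id, events in cases.items()}


def variant_counts(traces):
    """Count how many cases follow each sequence of activities."""
    return Counter(
        tuple(activity for _, activity in events) for events in traces.values()
    )


def lead_time_days(traces):
    """Lead time per case, from first to last recorded event, in days."""
    return {
        case_id: (events[-1][0] - events[0][0]).total_seconds() / 86400
        for case_id, events in traces.items()
    }


if __name__ == "__main__":
    traces = build_traces(EVENT_LOG)
    for variant, count in variant_counts(traces).most_common():
        print(count, "case(s):", " > ".join(variant))
    for case_id, days in lead_time_days(traces).items():
        print(case_id, f"{days:.1f} days")
```

In this made-up log, one order is invoiced before it is shipped. Spotting such deviations from the expected path, together with their effect on lead time, is the kind of insight Process Mining aims to surface.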

What is the impact of Process Mining on the O2C process?

Implementing Process Mining within the O2C process can yield significant benefits. McKinsey research shows that companies that implement Process Mining can achieve up to 20% more efficiency in their O2C process. This means faster order turnaround, fewer errors and delays, and ultimately happier customers. In addition, according to McKinsey, using Process Mining can lead to a 10-20% improvement in operational efficiency.

But Process Mining provides even more optimization. Another study conducted by Gartner showed that using Process Mining can lead to a 20-30% reduction in order-to-cash process lead time.

In addition, companies can significantly reduce their Days Sales Outstanding (DSO). Research by Gartner shows that with Process Mining, companies can reduce their DSO by an average of 15%, which has a direct impact on cash flow.
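As a quick illustration of what a 15% reduction means in practice, the sketch below uses the common definition of DSO: accounts receivable divided by credit sales over a period, multiplied by the number of days in that period. The amounts are invented for the example and the function name is an assumption, not part of any specific tool.

```python
def days_sales_outstanding(accounts_receivable, credit_sales, period_days):
    """DSO = accounts receivable / credit sales * days in the period."""
    if credit_sales <= 0:
        raise ValueError("credit_sales must be positive")
    return accounts_receivable * period_days / credit_sales


# Hypothetical quarter: 150,000 outstanding on 900,000 of credit sales.
current_dso = days_sales_outstanding(150_000, 900_000, period_days=90)

# The 15% average reduction cited above, applied to this example.
improved_dso = current_dso * (1 - 0.15)

if __name__ == "__main__":
    print(f"Current DSO:  {current_dso:.2f} days")
    print(f"Improved DSO: {improved_dso:.2f} days")
```

With these example figures, customers pay a little over two days sooner on average, which frees up cash without any change in sales.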

Process Mining offers opportunities for finance managers

For finance managers, Process Mining offers a gold mine of insights and opportunities. With real-time insight into the O2C process, finance managers can make data-driven decisions, manage risk and deploy their team more efficiently.

This is obviously hugely important at a time when digitization, automation and data analytics are key to competitive advantage. In this regard, Process Mining is an essential tool for any finance manager looking to move their organization forward.

Rockfeather and Process Mining

At Rockfeather, we understand the importance of efficient business processes. With our expertise in Process Mining, we help organizations optimize their O2C process, reduce costs and increase competitiveness. Contact us to find out how we can help you take your O2C process to the next level.

Microsoft Fabric

What is Microsoft Fabric?

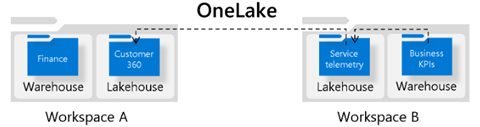

Microsoft Fabric is Microsoft’s new solution, integrating multiple services that can be used across your organization’s data pipeline. This enables smoother collaboration across your data-oriented activities, from data engineering and data science to data visualization. The foundation of Fabric is OneLake, the single data lake in which all data across your organization is stored. Having only one place where all data is stored in the same format improves computing efficiency and flexibility, removes data duplication and eliminates limitations due to data silos. Each domain or team can work from its own workspace, located within OneLake. Teams can use any computing engine they like, store their data in the format they need using a Warehouse or Lakehouse, and use any application to maximize the insights from their data.

Together with Microsoft Fabric, the AI powered Copilot is introduced. It uses Large Language Models to assist users of any application. By typing any request in natural language, Copilot suggests clear and concise actions in the form of code destined for Notebooks, visuals and reports in Power BI or data integration plug-ins.

What does Fabric offer?

Fabric is offered as Software as a Service. This means that Fabric is designed to remove the complexity of integrating all data activities within an organization. Simply put, data stored in Workspace B can be accessed in Workspace A via a shortcut between the Lakehouses. This prevents data duplication and improves storage performance. As a result of standardizing the storage of data and combining Data Warehousing, Data Engineering, Data Factory, Data Science, Real-Time Analytics and Power BI, collaboration between teams and team members becomes more seamless. Fabric also comes with unified governance principles and computing resource purchases, making both more efficient for your organization as a whole. Data sensitivity labels are therefore standardized across domains, and data lineage can be tracked across the data pipeline. To empower every business user, all insights discovered in Fabric can be integrated into Office tools. Sharing the results of your Data Science project via mail or sharing your new Power BI report during a Teams meeting is possible with a single click.

Copilot

Copilot offers all Fabric users the possibility to generate deeper insights into their data. Even inexperienced users can complete tasks like data transformations or creating summarizing visuals with help from Copilot. However, use Copilot with care. Since it is an AI-powered service, its answers depend on your input and should always be reviewed critically.

Advantages of Fabric

- Increase in collaboration with other teams

- Seamless integration of services

- Scalability

- Cost Efficiency

- User-friendly experience

- One Copy Principle

Want to know more about Microsoft Fabric?

Want to know more about Microsoft Fabric as a service? Take a look at the Fabric Masterclass that dives deep into different Fabric use cases for Data Science, Data Engineering, and Data Visualization.

Data Engineering in Microsoft Fabric

In Microsoft Fabric, data engineering plays a pivotal role in empowering users to design, build, and maintain the infrastructure that enables data collection, storage, processing, and analysis for their organizations.

Data Engineering in Microsoft Fabric encompasses three main components:

- Data Engineering: This aspect involves collecting data from various sources, processing it in real-time or batch mode, and transforming it into actionable insights. Microsoft Fabric’s Data Engineering ensures scalability and flexibility, making it suitable for handling diverse data challenges.

- Data Factory: As a fully managed data integration service, Data Factory enables the creation and orchestration of complex data workflows. It allows seamless data movement and transformation across different sources and destinations, simplifying data processing and ensuring efficiency.

- Data Warehousing: Provides a centralized repository for structured and unstructured data. It offers scalable and secure storage, ensuring efficient query performance and easy access to critical data.

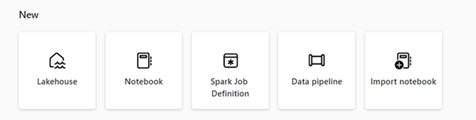

These three main components are in turn made accessible through key features available from the data engineering homepage that include:

- Lakehouse creation and management, where users can oversee their data in a unified repository for storage and processing.

- Pipeline design, enabling efficient data copying into their Lakehouse.

- Spark job definitions to easily submit batch and streaming jobs to the Spark cluster for processing and analysis.

- Notebooks to craft code for data ingestion, preparation, transformation, and streamlining data engineering workflows.

Business case:

In the dynamic world of retail, a savvy company harnesses Microsoft Fabric’s array of tools to revolutionize its data landscape. OneLake, its all-encompassing data repository, harmonizes sales, inventory, and customer data streams. By orchestrating data pipelines, the company channels diverse information into OneLake with precision. Spark job definitions enable real-time analysis, unraveling intricate sales trends and highlighting inventory fluctuations. Interactive notebooks within this ecosystem streamline data engineering, refining information for impactful Power BI reports. This integration fuels agile decision-making, propelling the retail venture toward strategic brilliance amidst a competitive market.

Want to know more about Microsoft Fabric?

Want to know more about Microsoft Fabric as a service? On October 26 we’re organizing a Fabric Masterclass that dives deep into different Fabric use cases for Data Science, Data Engineering, and Data Visualization.

Data Science in Microsoft Fabric

Microsoft Fabric is a platform that offers Data Science solutions to empower users to complete end-to-end data science workflows for data enrichment and business insights. The platform supports a wide range of activities across the entire data science process, from data exploration, preparation, and cleansing to experimentation, modeling, model scoring, and serving predictive insights.

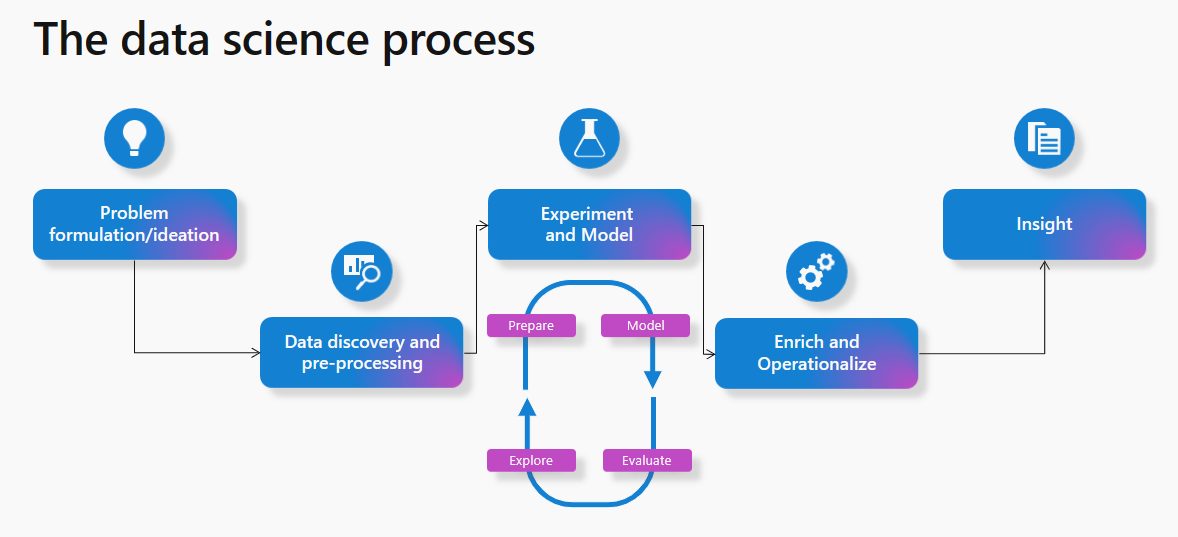

The typical data science process in Microsoft Fabric involves the following steps:

- Problem formulation and ideation: Data Science users in Microsoft Fabric work on the same platform as business users and analysts, which makes data sharing and collaboration between different roles seamless.

- Data Discovery and pre-processing: Users can interact with data in OneLake using the Lakehouse item. There are also tools available for data ingestion and data orchestration pipelines.

- Experimentation and ML modeling: Microsoft Fabric offers capabilities to train machine learning models using popular libraries like PySpark (Python), sparklyr (R), and scikit-learn. It also provides a built-in MLflow experience for tracking experiments and models.

- Enrich and operationalize: Notebooks can handle machine learning model batch scoring with open-source libraries for prediction, or the Microsoft Fabric scalable universal Spark Predict function.

- Gain insights: Predicted values can easily be written to OneLake, and seamlessly consumed from Power BI reports.

Business case:

The financial department of your organization already uses Power BI within Fabric to visualize their data. Now, they would like to use machine learning to generate a cashflow forecast. The data is already in a Lakehouse in OneLake and therefore does not have to be moved or copied. It can be used directly in Synapse Data Science to preprocess the data and perform exploratory data analysis using notebooks. Then, model experimentation can start within the notebooks, tracking important metrics using the built-in MLflow capabilities. After landing on a model that performs well, a cashflow forecast can be generated and written back to the Lakehouse, ready to be visualized within Power BI. The notebooks can then be scheduled to automatically generate a monthly cashflow forecast.
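The experimentation step in this business case can be sketched in a few lines of plain Python. This is an illustration of the workflow only, under loud assumptions: the cashflow figures are invented, the two "models" are deliberately simple baselines, and an ordinary dictionary stands in for the metric tracking that MLflow would provide inside Fabric. No Fabric or MLflow API is called here.

```python
from statistics import mean

# Hypothetical monthly net cashflow history (in thousands of euros).
HISTORY = [120, 132, 128, 141, 150, 147, 158, 163, 160, 172, 179, 181]


def last_value(series):
    """Baseline 1: next month equals the most recent month."""
    return series[-1]


def moving_average(series, window=3):
    """Baseline 2: next month equals the mean of the last `window` months."""
    return mean(series[-window:])


def backtest_mae(model, series, min_history=3):
    """Mean absolute error of one-step-ahead forecasts over the history."""
    errors = [
        abs(model(series[:i]) - series[i])
        for i in range(min_history, len(series))
    ]
    return mean(errors)


def run_experiment(series, models):
    """Score every candidate, keep the metrics, forecast with the best one."""
    metrics = {name: backtest_mae(model, series) for name, model in models.items()}
    best = min(metrics, key=metrics.get)
    return {
        "metrics": metrics,          # stand-in for tracked experiment metrics
        "best_model": best,
        "forecast": models[best](series),
    }


MODELS = {"last_value": last_value, "moving_average_3": moving_average}
result = run_experiment(HISTORY, MODELS)

if __name__ == "__main__":
    for name, mae in result["metrics"].items():
        print(f"{name}: MAE = {mae:.2f}")
    print("Selected:", result["best_model"], "forecast:", result["forecast"])
```

In a real project the candidates would be proper forecasting models and the selected forecast would be written back to the Lakehouse, but the loop of scoring candidates, recording metrics, and picking a winner stays the same.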

Synapse Real-Time Analytics in Fabric

Microsoft Fabric can also serve as a powerful tool for real-time data analytics, featuring an optimized platform tailored for streaming and time-series data analysis. It is thoroughly designed to streamline data integration and facilitate rapid access to valuable data insights. This is achieved through automatic data streaming, indexing, and data partitioning, all of which are applicable to various data sources and formats. This platform proves to be particularly well-suited for organizations seeking to elevate their analytics solutions to a larger scale, all the while making data accessible to a diverse spectrum of users. These users span from citizen data scientists to advanced data engineers, thus promoting a democratized approach to data utilization.

Key features of Real-Time Analytics include:

- Capture, transform, and route real-time events to various destinations, including custom apps.

- Ingest or load data from any source, in any data format.

- Run analytical queries directly on raw data without the need to build complex data models or create scripting to transform the data.

- Import data with by-default streaming that provides high performance, low latency, high freshness data analysis.

- Work with versatile data structures: query structured, semi-structured, or free-text data.

- Scale to an unlimited amount of data, from gigabytes to petabytes, with unlimited scale on concurrent queries and concurrent users.

- Integrate seamlessly with other experiences and items in Microsoft Fabric.

Data Visualization in Microsoft Fabric

By seamlessly integrating with Power BI, Microsoft Fabric revolutionizes how you work with analytics.

There are several benefits to choosing Fabric on top of Power BI. Here are some points that highlight the advantages:

- Effective Data Accessibility: Microsoft Fabric promotes easy access to a unified and centralized data environment, OneLake. As the name suggests, it is a single, logical data lake that backs all your Fabric workloads and stores all the data you use in Fabric. This way, data from multiple sources can be analyzed and interpreted faster and more efficiently, supporting informed decisions and valuable insights.

- Data Governance: Microsoft Fabric provides robust data governance capabilities, ensuring that the data is accurate and reliable. This promotes data integrity, compliance and proper data stewardship, enabling analysts to show trustworthy and high-quality data in reports.

- Integration with Business Intelligence Tools: Microsoft Fabric integrates popular business intelligence tools, such as Power BI. This integration empowers leveraging BI skills and work within preferred toolsets whilst still benefiting from the unified data environment.

- Collaborative Analytics: The ability to share visualized data and insights with stakeholders across the organization promotes a data-driven culture and facilitates better decision-making throughout the business.

- Self-Service Analytics: Microsoft Fabric empowers self-sufficient analysis. The platform’s intuitive interfaces and self-service capabilities let users access, manipulate, and analyze data independently, reducing reliance on IT teams for data-related tasks.

Copilot

Copilot is an AI-powered capability that plays a significant role here because it helps you to:

- Generate insights and reports

- Create narrative summaries

- Write and edit DAX calculations

This means that you can use natural language to look at and analyze any data in any way to gain new insights.

Business case:

Imagine that you need to create a sales report and are not sure how to translate the requirement into an analysis. You can simply ask Copilot a question such as:

“Build a report summarizing the sales growth rate of the last 2 years”

With this simple question, Copilot returns suggestions that answer your needs. It can suggest new visuals, implement changes or create drilldowns in existing visuals, or even design complete report pages from scratch.

More updates on the horizon

It is expected that Fabric will continue to evolve by integrating more technologies and features. This will ensure that Power BI remains a robust and versatile tool that gives its users more opportunities to create insights and make data-driven decisions.

Boost your business efficiency with lowcode

In the fast-paced and highly competitive world of business, standing out is no longer just a desire, but a necessity. That’s exactly why organizations are constantly seeking innovative solutions to streamline their processes and increase efficiency. Traditionally, organizations have relied on hardcore IT to become more efficient. However, this approach is time-consuming and, more importantly, IT talent is hard to find. This combination often results in an overflowing backlog and a business that struggles to stand out. If you are looking for a game-changing solution that can boost your business’s efficiency and productivity, lowcode might be your answer.

At the end of 2025, 41% of all employees – IT-employees not calculated – will be able to independently develop and modify applications. – Gartner, 2022

What is lowcode?

Lowcode is an approach to application development. It enables developers of diverse experience levels to create applications for web and mobile using drag-and-drop components. These platforms free non-technical developers from the need to write code, while they can still receive assistance from seasoned professionals. With lowcode, we develop applications in a fraction of the time it used to take. But you can do a whole lot more with lowcode. For example, you don’t always need to create a completely new application. Sometimes, rebuilding part of a slow, outdated or complicated application into a more stable and smarter version is a better option. Or you can harness the power of lowcode technology to creatively link previously unconnected systems or merge various data sources in innovative ways. By doing so, you can use lowcode to create new data and insights you never expected.

Why use lowcode?

There are several reasons to start lowcode development today rather than tomorrow. Here are the strongest arguments:

- Rapid development: By using a drag-and-drop interface and pre-built components, you can deliver applications 40% to 60% faster than with traditional code.

- Relieve pressure on IT staff: Lowcode allows business users to develop applications themselves. This means that citizen developers can develop and maintain applications while your IT staff can focus on their own tasks.

- Easy integration: Lowcode platforms offer pre-built connectors and APIs, enabling smooth integrations between various applications.

- Enhanced user experience: Whether they are developers, business analysts or subject matter experts, everybody can contribute to the application development process. Break down silos, foster teamwork, and unlock the full potential of your workforce. Co-create an app that makes your colleagues happy.

- Cost saving: Lowcode platforms reduce costs by accelerating development, minimizing the need for specialized developers, and eliminating the need for extensive coding. Achieve more with less!

- Increased agility: With the ability to make changes on the fly, you can respond swiftly to feedback, market shifts, and emerging opportunities.

An example of lowcode at its bests

Before the COVID-19 pandemic, most companies did not have a desk reservation system in place, and employees would simply choose any available desk. However, with the arrival of COVID-19 and the need for social distancing, many companies began to see the advantages of a desk-reservation system.

While it could take more than a month to build such a desk-reservation system with traditional code, lowcode solutions solved this challenge within two weeks, from initial idea to go-live.

Build it fast, build it right, build it for the future

As with traditional development, it is important to make sure you build your lowcode solutions right. Because lowcode development moves much faster, it is especially important to stick to best practices so that you keep control over your lowcode landscape. For example, you don’t want:

- Duplicate code, apps or modules

- Uncontrollable sprawl of applications

- Data exposure

- A bad fit between processes and applications

To summarize: think before you start. Ultimately, governance empowers businesses to maximize the benefits of lowcode development while mitigating risks and ensuring long-term success.

Lowcode vs No-code

Although lowcode and no-code take quite different approaches to accelerating software development, they are often treated as the same concept. That is a misconception, because each approach brings its own pros and cons to the table. Simply put, both lowcode and no-code aim to speed up development and reduce the reliance on traditional coding practices, but they differ in how much coding is possible.

As explained in this blog, lowcode still requires some coding, but the complexity is reduced. No-code development, on the other hand, eliminates the need for coding altogether by providing a drag-and-drop interface to create applications using pre-built blocks. It focuses on empowering non-technical users to build applications. The main difference is that lowcode accelerates ‘traditional’ development while still giving the user a lot of customization options with little performance loss, whereas no-code is even faster to develop with. However, in exchange for that extra speed, you give up some performance and customization options.

Lowcode and no-code at Rockfeather

At Rockfeather we aim to develop the best applications for our customers. Therefore, we primarily use lowcode to build quality solutions. Depending on the challenge, we decide whether Microsoft PowerApps or OutSystems is the better fit for you. OutSystems is a lowcode platform that offers advanced customization and scalability. OutSystems promises to help developers build applications 4 to 10 times faster than with traditional development, without sacrificing performance. PowerApps, the other platform that we offer, is a tool that has both lowcode and no-code capabilities. The platform allows users with little to no coding experience to create applications. PowerApps has a visual development interface (no-code), but also offers the option for more experienced developers to extend functionality through custom code (lowcode).

Are you interested in knowing more about lowcode? Do you want to know more about the differences between PowerApps and OutSystems? Then sign up for our webinar, which deep-dives into the unique features of both platforms.

Deep Dive OutSystems vs PowerApps - Webinar

In this webinar, our experts compare the two solutions on four aspects. In about 30 minutes, you will learn the difference between PowerApps and OutSystems. Don’t miss out and sign up for this Deep Dive!

Webinar: What are the conclusions on the 2023 Gartner Magic Quadrant on Analytics & Business Intelligence?

Looking for a data visualization solution? The Gartner Magic Quadrant on BI tools is a report of a whopping 125 pages that lists all the platforms. Are you having a hard time dissecting every detail in the report? Don't worry, our experts delved into every little detail!

BI professionals always look forward to the Gartner Magic Quadrant for Business Intelligence & Analytics, since it’s one of the leading studies in the BI space. But how do you get the essentials from this 125-page report? And how independent and reliable is Gartner’s research really? What are the most important trends in Business Intelligence for 2023?

In about half an hour, our experts bring you up to speed on the most important findings of the new Gartner Magic Quadrant. We also discuss the most important trends for the coming year.

What will you learn in this webinar?

- The key takeaways from the Gartner Magic Quadrant for Business Intelligence & Analytics report

- Most interesting new insights for the coming year
