Boost your business efficiency with lowcode

In the fast-paced and highly competitive world of business, standing out is no longer just a desire but a necessity. That’s exactly why organizations are constantly seeking innovative solutions to streamline their processes and increase efficiency. Traditionally, organizations have relied on conventional, code-heavy IT to become more efficient. However, this approach is time-consuming and, more importantly, IT talent is hard to find. This combination often results in an overflowing backlog and a business that struggles to stand out. If you are looking for a game-changing solution that can boost your business’s efficiency and productivity, lowcode might be your answer.

By the end of 2025, 41% of all employees, not counting IT employees, will be able to independently develop and modify applications. – Gartner, 2022

What is lowcode?

Low-code is a visual approach to application development. It enables developers of diverse experience levels to create applications for web and mobile using drag-and-drop components. These platforms free non-technical developers from the need to write code, while seasoned professionals can still assist them where needed. With lowcode, we develop applications in a fraction of the time it used to take. But you can do a whole lot more with lowcode. For example, you don’t always need to create a completely new application. Sometimes, rebuilding part of a slow, outdated or complicated application into a more stable and smarter version is a better option. Or you can use low-code technology to link previously unconnected systems or merge various data sources in innovative ways. By doing so, you can use lowcode to create new data and insights you never expected.

Why use lowcode?

There are several reasons to start lowcode development today rather than tomorrow. Let me explain the best arguments:

- Rapid development

By using a drag-and-drop interface and pre-built components, you can deliver applications 40% to 60% faster than with traditional code.

- Relieve pressure on IT staff

Lowcode allows business users to develop applications themselves. This means that citizen developers can develop and maintain applications while your IT staff can focus on their own tasks.

- Easy integration

Lowcode platforms offer pre-built connectors and APIs, enabling smooth integrations between various applications.

- Enhanced user experience

Whether they are developers, business analysts or subject matter experts, everybody can contribute to the application development process. Break down silos, foster teamwork, and unlock the full potential of your workforce. Co-create an app that makes your colleagues happy.

- Cost saving

Low-code platforms reduce costs by accelerating development, minimizing the need for specialized developers, and eliminating the need for extensive coding. Achieve more with less!

- Increased agility

With the ability to make changes on the fly, you can respond swiftly to feedback, market shifts, and emerging opportunities.

An example of lowcode at its best

Before the COVID-19 pandemic, most companies did not have a desk reservation system in place, and employees would simply choose any available desk. However, with the arrival of COVID-19 and the need for social distancing, many companies began to see the advantages of a desk-reservation system.

While it could take more than a month to build such a desk-reservation system with traditional code, lowcode solutions solved this challenge within two weeks, from initial idea to go-live.

Build it fast, build it right, build it for the future

As with traditional development, it is important to build your lowcode solutions right. Because lowcode development is much faster, it is especially important to follow best practices and keep control over your lowcode landscape. For example, you don’t want:

- Duplicate code, apps or modules

- Uncontrollable sprawl of applications

- Data exposure

- A bad fit between processes and applications

To summarize: think before you start. Ultimately, governance empowers businesses to maximize the benefits of lowcode development while mitigating risks and ensuring long-term success.

Lowcode vs No-code

Although lowcode and no-code take very different approaches to accelerating software development, they are often grouped under the same concept. This is a common misconception, because the two approaches bring different pros and cons to the table. Simply put, both lowcode and no-code aim to speed up development and reduce the reliance on traditional coding practices, but they differ in how much coding each approach allows.

As explained in this blog, lowcode still requires some coding, but the complexity is reduced. No-code development, on the other hand, eliminates the need for coding altogether by providing a drag-and-drop interface for creating applications from pre-built blocks. It focuses on empowering non-technical users to build applications. The main difference is that lowcode accelerates ‘traditional’ development while giving the user many customization options at little performance cost, whereas no-code is even faster to develop with. However, in exchange for that speed, you give up some performance and customization options.

Lowcode and no-code at Rockfeather

At Rockfeather we aim to develop the best applications for our customers. Therefore, we primarily use lowcode to build quality solutions. Depending on the challenge, we decide whether Microsoft Power Apps or OutSystems is the better fit for you. OutSystems is a lowcode platform that offers advanced customization and scalability. OutSystems promises to help developers build applications 4 to 10 times faster than with traditional development, without sacrificing performance. Power Apps, the other platform that we offer, has both lowcode and no-code capabilities. The platform allows its users to create applications with little to no coding experience. Power Apps has a visual development interface (no-code), but also lets more experienced developers extend functionality through custom code (lowcode).

Are you interested in knowing more about lowcode? And do you want to know more about the differences between Power Apps and OutSystems? Then sign up for our webinar, which deep-dives into the unique features of both platforms.

Deep Dive OutSystems vs Power Apps - Webinar

In this webinar, our experts compare the two solutions on four aspects. In about 30 minutes, you will learn the difference between Power Apps and OutSystems. Don’t miss out and sign up for this Deep Dive!

Webinar: What are the conclusions on the 2023 Gartner Magic Quadrant on Analytics & Business Intelligence?

Looking for a Data Visualization solution? The Gartner Magic Quadrant on BI tools is a report of a whopping 125 pages that lists all the platforms. Are you having a hard time dissecting every detail in the report? Don't worry, our experts delved into every little detail!

BI Professionals always look forward to the Gartner Magic Quadrant for Business Intelligence & Analytics, since it’s one of the leading studies in the BI space. But how do you get the essentials from this 125-page report? And how independent and reliable is Gartner’s research really? What are the most important trends in Business Intelligence for 2023?

In about half an hour, our experts bring you up to speed on the most important findings of the new Gartner Magic Quadrant. We also discuss the most important trends for the coming year.

What will you learn in this webinar?

- The key takeaways from the Gartner Magic Quadrant for Business Intelligence & Analytics report

- Most interesting new insights for the coming year

Watch the webinar!

By filling in this form you will be able to access the webinar immediately.

Webinar: What are the best Data Engineering tools of 2023?

What are the best Data Engineering tools of 2023? Every year, BARC publishes a report featuring various Data Engineering technologies and evaluates these tools on various aspects. In half an hour, we'll break down the most interesting aspects of this report and bring you up to speed on the latest trends.

Within Data Engineering, there are various technologies that you can use within your organization. But which technologies keep up with developments, and what are the latest trends in the Data Engineering space?

Every year, BARC writes a data management summary covering various technologies and reviews them on different aspects. We reviewed this report for you and will tell you all the interesting details. Fill out the form below to learn about the most interesting trends and technologies in the field of Data Engineering.

What will you learn in this webinar?

- The key takeaways from the BARC report

- Most interesting new insights

Watch the webinar

Webinar: What are the best Low Code apps in 2023?

Looking for a low-code way to do application development? The Gartner Magic Quadrant on Low Code platforms is a report of a whopping 125 pages that lists all the platforms. Are you having a hard time dissecting every detail in the report? Don't worry, our experts delved into every little detail!

In the report “Magic Quadrant for Enterprise Low-Code Application Platform,” Gartner explains the differences between various Low Code platforms. But how do you get the important information from this 125-page report? Luckily, you don’t have to do this yourself. In half an hour, our Low Code specialist will update you on the most important developments and trends for the upcoming year.

What will you learn in this webinar?

- The key takeaways from Gartner’s report: Magic Quadrant for Enterprise Low-Code Application Platform

- Most interesting new insights

Watch the webinar

Databricks for Data Science

Picture this: your business is booming, and you need to make informed decisions quickly to stay ahead of the competition. But forecasting can be a tedious and time-consuming process, leaving you with less time to focus on what’s important. That's where Azure Databricks comes in! We used this powerful technology to automate our internal forecasting process and save precious time. In this blog post, we'll show you the steps we took to streamline our workflow and make better decisions with confidence. So make yourself a coffee and enjoy the read!

Initial Setup

Before we dive into the nitty-gritty of coding with Databricks, there are a few important setup steps to take.

First, we created a repository on Azure DevOps, where we could easily track and assign tasks to team members, make comments on specific items, and link them to our Git commits. This helped us stay organized and focused on our project goals.

Next, we set up a new resource group on Azure with three resources: Azure Databricks Services, a blob storage account, and a key vault. Although we already have clean data in our Rockfeather Database (thanks to our meticulous data engineers), we wanted to keep our intermediate files separate in this resource group to ensure version control and maintain a clean workflow. Within our blob storage, we created containers to store our formatted historical actuals, exogenous features, and predictions.

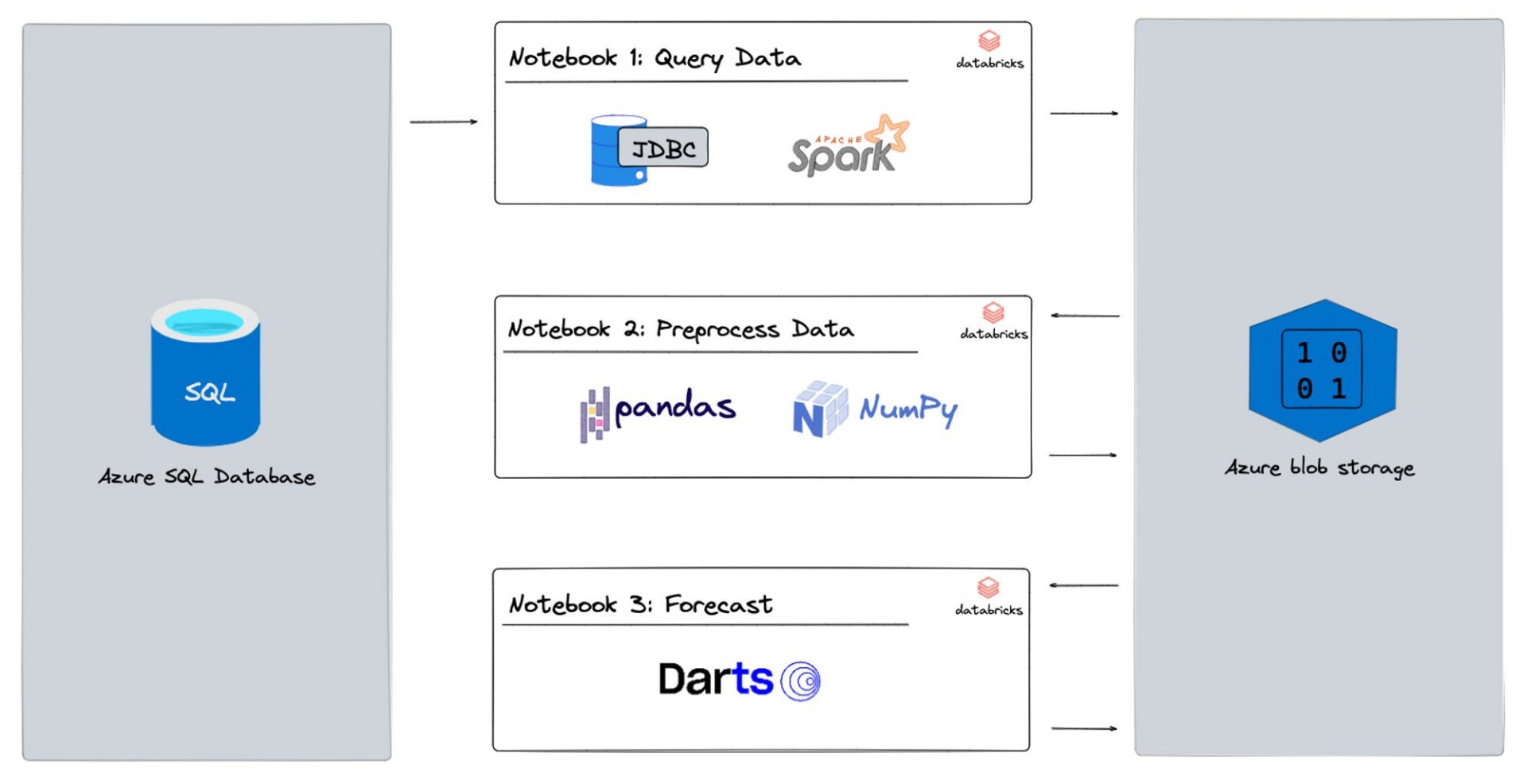

Finally, we sketched out a high-level project architecture to get a bird’s-eye view of the project. This helped us align on our deliverables and encouraged discussion within the team about what a realistic outcome would look like. By taking these initial setup steps, we were able to hit the ground running with Databricks and tackle our forecasting process with confidence.

Project Architecture

Moving on to Databricks

Setting up Azure Databricks

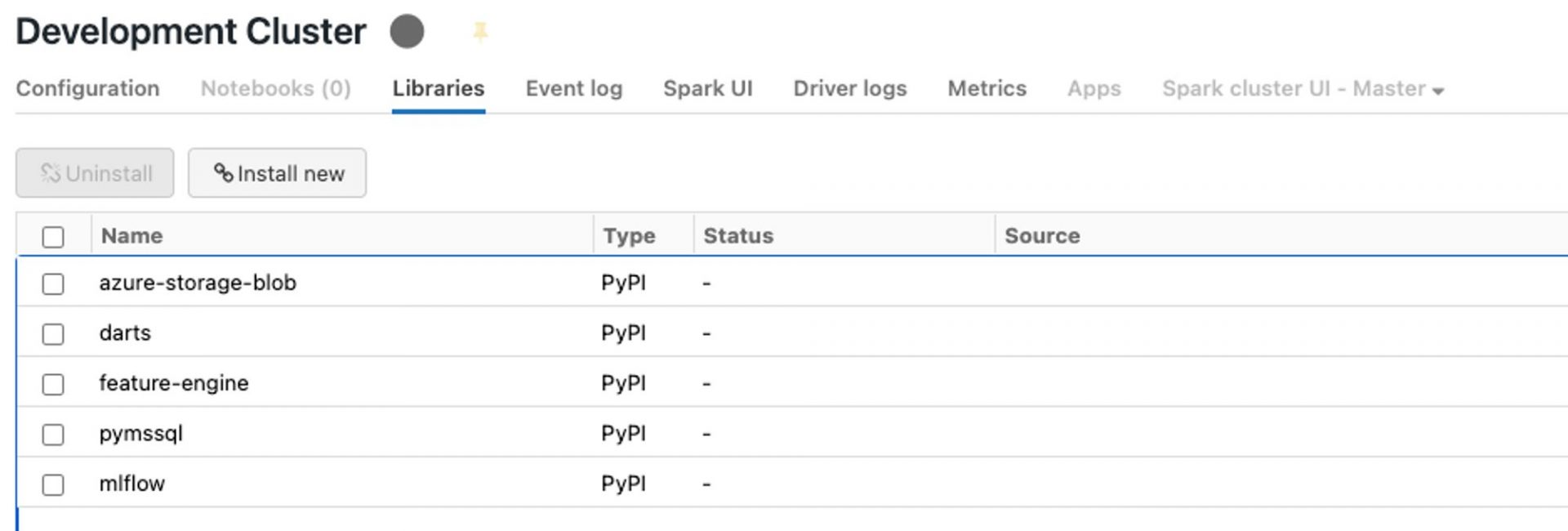

Now we’re ready to dive into Databricks and its sleek interface. But before we start coding, there are a few more setup steps to take.

First, we want to link the Azure DevOps repo we set up earlier to Databricks. Kudos to Azure Databricks, the integration between these two tools is seamless! To link the repo, we simply go to User Settings > Git Integration and drop the repo link there. For more information, check out this link.

To keep our database and blob storage keys and passwords secure, we use the key vault to store our secrets, which we link to Databricks. You can read more about that here!

Lastly, we need to create a compute resource that will run our code. Unlike Azure Machine Learning, Databricks doesn’t have compute instances, only clusters. While this means it takes a minute to spin up the cluster, we don’t have to worry about forgetting to terminate it since it automatically does so after a pre-defined time period of inactivity! It’s also super easy to install libraries on our compute: just head over to the Libraries tab and click on “⛓️ Install new”!

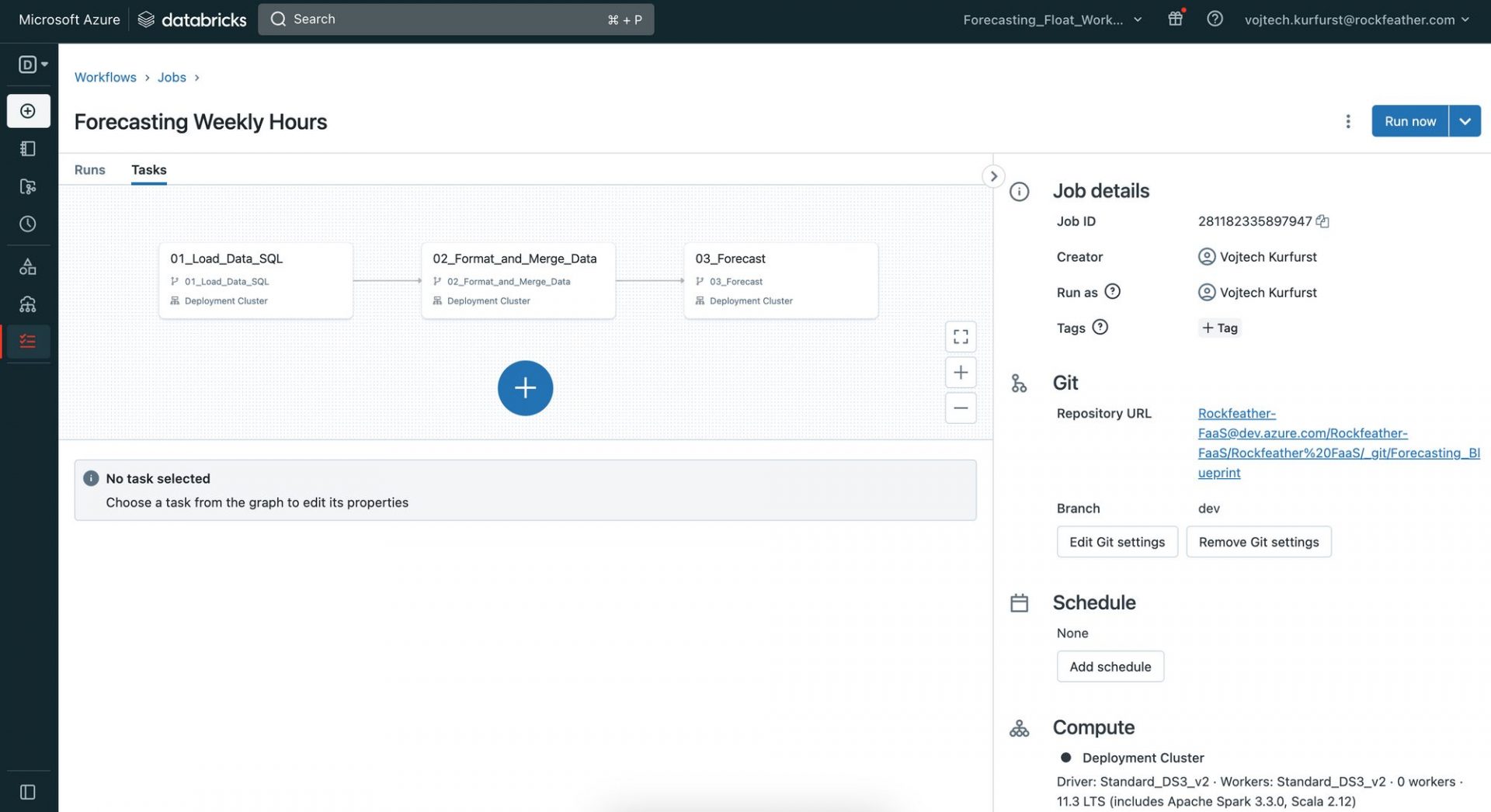

From Database Query to Forecast

Now that our setup is complete, we move on to using Databricks notebooks for data loading, data engineering, and forecasting. We break this down into three sub-sections:

- Query Data: We create a notebook which reads the formatted data from the blob storage containers we created earlier. We then transform this data and create data frames for further data engineering.

- Preprocess Data: Here, we perform data cleaning, feature engineering, and data aggregation on our data frames to get them into a shape suitable for forecasting. This is also the place to apply transformations such as normalisation, scaling, and one-hot encoding to prepare our data for modelling.

- Forecast: We use machine learning models such as Random Forest, Gradient Boosting, and Time Series models to forecast future values based on our prepared data. For our baseline model, we use the classic Exponential Smoothing model. We then store these predictions back in our blob storage for further analysis and visualisation.

By breaking down the process into these three sub-sections, we can work more efficiently and focus on specific tasks without getting overwhelmed. As shown in the Project Architecture above, each step is one notebook. Let’s have a closer look at each notebook.

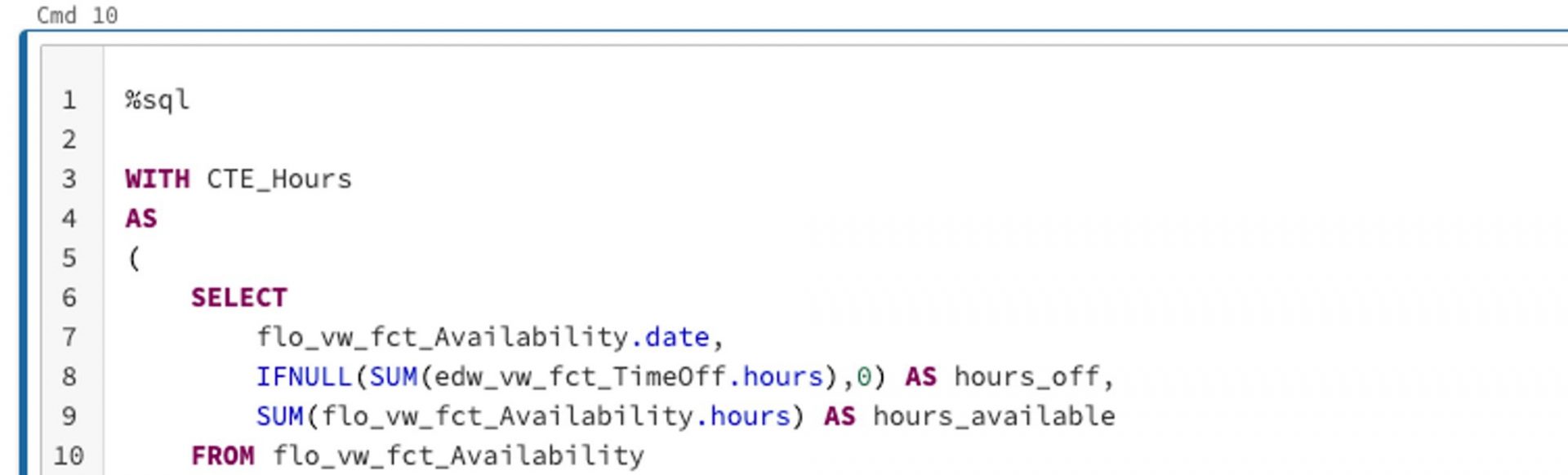

Notebook 1: Query Data

The code we’ll be writing in Databricks is pretty much in standard notebook format, which is familiar territory to all data scientists. Our data engineers in the audience will also appreciate that we can write, for example, SQL code in the notebook. All we have to do is include the %sql magic command (link: https://docs.databricks.com/notebooks/notebooks-code.html) at the beginning of the cell, as shown below. We use this approach for our first notebook, where we query the data we need. In our case here, it’s past billable hours and the available hours of our lovely consultants. Once we have the data we need, aggregated to the right level, we save it in our blob storage for the next step.
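As a rough illustration of the kind of query this first notebook runs, the sketch below aggregates billable and available hours to month level. It is a stand-in built on assumptions: the table `hours`, its columns, and the sample rows are hypothetical, and Python's built-in `sqlite3` module is used only so the query can run anywhere. In a Databricks notebook, a similar `SELECT` would sit in a cell that starts with `%sql`, possibly with different date functions.

```python
import sqlite3

# Hypothetical table contents, invented for illustration only.
ROWS = [
    ("2023-01-09", "anna", 32.0, 40.0),
    ("2023-01-16", "anna", 36.0, 40.0),
    ("2023-01-09", "ben", 30.0, 40.0),
    ("2023-02-06", "anna", 28.0, 32.0),
    ("2023-02-06", "ben", 35.0, 40.0),
]

# Aggregate weekly rows per consultant to one row per month.
MONTHLY_HOURS_SQL = """
SELECT substr(week_start, 1, 7) AS month,
       SUM(billable_hours)      AS billable_hours,
       SUM(available_hours)     AS available_hours
FROM hours
GROUP BY month
ORDER BY month
"""


def monthly_hours(rows):
    """Load the rows into an in-memory table and run the monthly aggregation."""
    conn = sqlite3.connect(":memory:")
    try:
        conn.execute(
            "CREATE TABLE hours ("
            "week_start TEXT, consultant TEXT, "
            "billable_hours REAL, available_hours REAL)"
        )
        conn.executemany("INSERT INTO hours VALUES (?, ?, ?, ?)", rows)
        return conn.execute(MONTHLY_HOURS_SQL).fetchall()
    finally:
        conn.close()


monthly = monthly_hours(ROWS)
```

Aggregating in the query keeps the file we write to blob storage small and already at the level the forecast needs.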

Notebook 2: Preprocess data

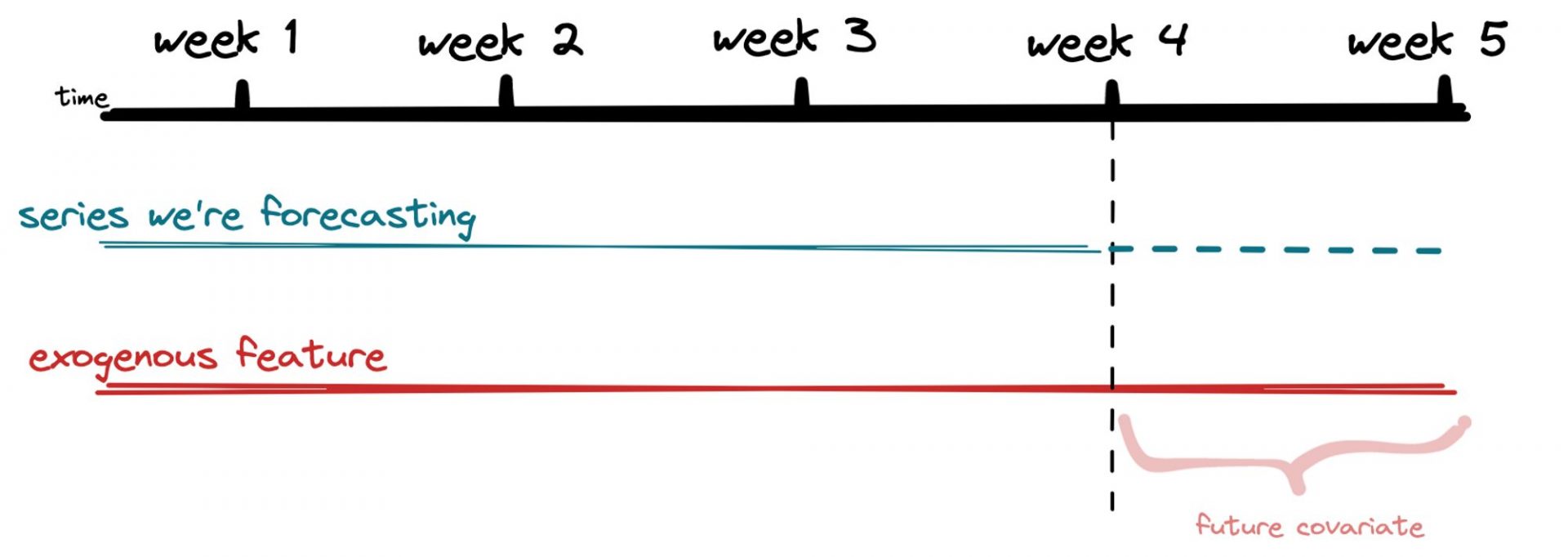

In Notebook 2, we use pandas and numpy to preprocess our data and do our feature engineering. We need to make sure we have all the data on the features we’ve identified for the whole forecast period before we start training any models. For example, if we’re forecasting billable hours and using total available hours of our consultants as an exogenous feature, we need to have that data available for the entire forecast period. While this may seem obvious in this case, it’s an important step to take before we jump into any modelling! Once we’ve got our data formatted the way we want it, we save it back to blob storage and move on to the next notebook.
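The availability check described above can be sketched in a few lines of pandas. This is a minimal example under assumptions: the function name `missing_feature_periods`, the column `available_hours`, and the sample values are hypothetical and not taken from our actual notebook.

```python
import pandas as pd


def missing_feature_periods(features, forecast_index, columns):
    """Return the forecast periods for which any exogenous feature is missing.

    features: DataFrame indexed by period start date.
    forecast_index: DatetimeIndex of the periods we want to forecast.
    columns: names of the exogenous feature columns to check.
    """
    # Reindexing inserts NaN rows for forecast periods absent from `features`.
    aligned = features.reindex(forecast_index)[list(columns)]
    incomplete = aligned.isna().any(axis=1)
    return forecast_index[incomplete.to_numpy()]


# Hypothetical data: available hours are only known up to March.
features = pd.DataFrame(
    {"available_hours": [1200.0, 1150.0, 1300.0]},
    index=pd.date_range("2023-01-01", periods=3, freq="MS"),
)
forecast_index = pd.date_range("2023-02-01", periods=3, freq="MS")

gaps = missing_feature_periods(features, forecast_index, ["available_hours"])
```

Here `gaps` contains April 2023, the one forecast month without a known value. Running a check like this before training makes a gap visible early, instead of surfacing as an error inside the model.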

Notebook 3: Forecast

Here’s where the magic happens: we finally get to do some forecasting! We use the darts package to train multiple models and pit them against our baseline Exponential Smoothing model. We love this package because it’s super easy to use and makes backtesting a breeze. To evaluate model accuracy, we use the mean absolute percentage error (MAPE), a simple metric that’s easy to understand.

We try out different models like linear regression, random forest, and XGBoost and compare their performance against our baseline. Our baseline model had a MAPE of 44%, which isn’t great, but we’re not deterred. By adding in our single exogenous feature and leveraging our three ML models, we were able to decrease our MAPE to 12%, a huge improvement! And of course, we save our results back to blob storage for future reference.
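To make the metric and the baseline concrete, here is a small plain-Python sketch of MAPE and of simple exponential smoothing. It is not the darts implementation we used (darts offers a richer Exponential Smoothing model); the function names, the smoothing factor `alpha`, and the numbers are made up for illustration.

```python
def mape(actual, predicted):
    """Mean absolute percentage error, in percent.

    Assumes no actual value is zero, since each error is divided by it.
    """
    if len(actual) != len(predicted) or not actual:
        raise ValueError("actual and predicted must be non-empty and equal length")
    total = sum(abs((a - p) / a) for a, p in zip(actual, predicted))
    return 100.0 * total / len(actual)


def simple_exponential_smoothing(history, alpha, horizon):
    """Flat forecast from simple exponential smoothing.

    The level is updated as alpha * y_t + (1 - alpha) * previous level,
    starting from the first observation.
    """
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must be in (0, 1]")
    level = history[0]
    for value in history[1:]:
        level = alpha * value + (1 - alpha) * level
    return [level] * horizon


# Made-up numbers, not the figures from our project.
history = [100.0, 110.0, 120.0]
actual = [100.0, 200.0]
baseline = simple_exponential_smoothing(history, alpha=0.5, horizon=2)
baseline_mape = mape(actual, baseline)
```

With these toy values the baseline predicts 112.5 for both periods, which gives a MAPE of about 28%. Any more advanced model has to beat that number to earn its place.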

With our forecasting pipeline up and running in Databricks, we can sit back and watch the predictions roll in. It’s amazing what you can do with a little data and a lot of creativity!

💡 Forecast backtesting is a method used to check how accurate a forecast is by comparing its predictions with what actually happened. This helps identify any errors or biases in the forecasting model, which can be used to improve future predictions. It’s a useful tool in many industries, such as finance or weather forecasting, and helps decision-makers make better-informed decisions.
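The idea behind backtesting can be sketched as a rolling-origin loop: at each step the model sees only the past and predicts the next point, and the prediction is then compared with what actually happened. The code below is a simplified illustration with a hypothetical naive model and made-up data. It is not the darts backtesting routine.

```python
def backtest(series, forecast_fn, min_train, horizon=1):
    """Rolling-origin backtest.

    At each cutoff the model only sees series[:cutoff] and predicts the next
    `horizon` points. Returns a list of (cutoff, predictions, actuals) tuples.
    """
    results = []
    for cutoff in range(min_train, len(series) - horizon + 1):
        train = series[:cutoff]
        actuals = series[cutoff:cutoff + horizon]
        predictions = forecast_fn(train, horizon)
        results.append((cutoff, predictions, actuals))
    return results


def naive_forecast(train, horizon):
    """Hypothetical stand-in model: repeat the last observed value."""
    return [train[-1]] * horizon


def mean_absolute_error(results):
    """Average absolute error over all backtest predictions."""
    errors = [
        abs(p - a)
        for _, predictions, actuals in results
        for p, a in zip(predictions, actuals)
    ]
    return sum(errors) / len(errors)


series = [10.0, 12.0, 11.0, 15.0, 14.0]  # made-up history
results = backtest(series, naive_forecast, min_train=2)
mae = mean_absolute_error(results)
```

Because every prediction is made without access to future values, the resulting error is a fair estimate of how the model would have performed in practice.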

Our thoughts on Databricks

We have got to give props to Databricks: it’s a tool that makes our lives easier. It’s like having a Swiss army knife in your pocket: slim, versatile, and it gets the job done. We love the collaboration feature, which lets us code together with our team like a real-time jam session. Plus, setting up and scheduling pipelines is as smooth as butter. The best part is the seamless integration with MLflow and PySpark, which is like having your favorite sauce on your favorite dish. Let’s just say that Databricks has been a game-changer for us, and we’re excited to see what new features they’ll cook up in the future!

Next Steps

Although we’ve got a pipeline set up, our forecasting journey still has an exciting ride ahead. That’s always how it is with data science projects. The next step is generating maximum value from our forecasts.

We are discussing with our Data Viz team how best to integrate this forecast into our dashboards. Also, we’re scheduling meetings with our CFO to see how exactly we can make his job easier by, for example, introducing more exogenous features or reporting historical accuracy.

As a data-thinking organization, we’re committed to becoming more anti-fragile, and we treat our customers the same way. This means building resilience into our forecasting models to ensure they can withstand unexpected events and continue providing reliable forecasts. If you found this post inspiring and would like to know more, don’t hesitate to reach out!

Webinar: What are the best Data Science tools in 2023?

Where should you start when looking for a Data Science & Machine Learning solution? What information is important? And especially which sources are the most reliable? Our expert goes over these questions in just half an hour. Sign up now!

Choosing a Data Science solution for your business is a difficult task. Our Data Science experts have looked into all the options and will be talking about the best tools and the newest trends in the Data Science & Machine Learning market. All in a short and sweet 30-minute webinar. They’ll also tell you everything you need to think about when picking a tool and what the pros and cons of the most commonly used tools are.

What will you learn in this webinar?

- The best Data Science and Machine Learning tools on the market

- The most interesting new trends in the market

Fill out this form and view the webinar right away!

What if your dashboards turn out to be misleading?

An effective dashboard is a tool for getting clear insights into your data. But what if a dashboard is less effective than imagined? Or even worse: what if your dashboards mislead users? In this blog, we discuss two new features of Power BI. One can mislead your users, while the other makes your dashboards more effective.

A highly requested solution with undesirable side effects

The first update we want to highlight allows the shared axis to be turned off for small multiples. A common complaint among Power BI users was “due to the shared axis, I can’t see smaller values properly anymore”. This update makes that possible, but how useful is turning off the shared axis really?

Let’s see what happens when we turn off the shared axis.

As you can see, it is not very easy to tell which product group is the largest if the y-axes are not equal. In fact, at first glance, it all looks the same. Only when you look longer do you see that the axes are not equal.
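The effect is easy to reproduce with a little arithmetic. The sketch below computes the relative bar heights a reader would see with and without a shared axis. The product groups and sales values are hypothetical, and the function is only an illustration of the scaling, not of how Power BI renders charts.

```python
def bar_heights(panels, shared_axis):
    """Relative bar heights (0 to 1) for each small-multiple panel.

    panels: dict mapping panel name to a list of non-negative values.
    shared_axis: if True, every panel is scaled to the overall maximum;
                 if False, each panel is scaled to its own maximum.
    """
    overall_max = max(max(values) for values in panels.values())
    heights = {}
    for name, values in panels.items():
        axis_max = overall_max if shared_axis else max(values)
        heights[name] = [value / axis_max for value in values]
    return heights


# Hypothetical product groups with very different sales volumes.
panels = {"Bikes": [800.0, 1000.0], "Helmets": [40.0, 50.0]}

independent = bar_heights(panels, shared_axis=False)
shared = bar_heights(panels, shared_axis=True)
```

With independent axes both panels draw bars of identical height, even though one group sells twenty times more than the other. With a shared axis the difference in size is immediately visible.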

We believe that you should always keep the axes equal, even when the values are small. After all, you are comparing different segments. But then how can you visualize small multiples while also keeping them easy to read? It’s simpler than it sounds. Take a look:

In this case, we recommend using Zebra BI, a plug-in for BI tools such as Power BI. By using this plug-in, the small multiples are placed in boxes of different sizes depending on the values. This allows users to properly compare the segments and thus draw the correct conclusion, as they are not misled by different axes. Sounds useful, right?

An update that simplifies reading a report

A feature from the same update that does make Power BI dashboards more effective is the addition of Dynamic Slicers. With Dynamic Slicers, you can use field parameters to dynamically change the measures or dimensions analyzed within a report. But why is this so useful?

With Dynamic Slicers, you can help readers of a BI report explore and customize the report, so they can use the information that is useful for their analysis. As shown in the GIF above, a user can filter by Customers, Product Family, Product Group, or other slicers that you’ve set up in the report.

In addition, you can parameterize slicers further to support dynamic filtering scenarios. In the GIF below, you can see how it works. You can see that the value comes from the dynamic slicer and changes dynamically. This gives your users even more opportunities to interact with the dashboard.

How do we make sure Dashboards are not misleading?

Of course, Microsoft continuously tries to update and improve Power BI, but some updates can have unwanted effects. We have been using IBCS standards for years as our Power BI report guidelines, and we believe that applying these standards properly prevents such unintended consequences. To test whether our reports comply with these standards, we use Zebra BI’s IBCS-proof plug-in while building dashboards in Power BI. Want to learn more about how IBCS standards can help your organization?

Learn more about IBCS reporting

Fill out the form below and find out the basics of IBCS reporting in a quick 20-minute webinar!

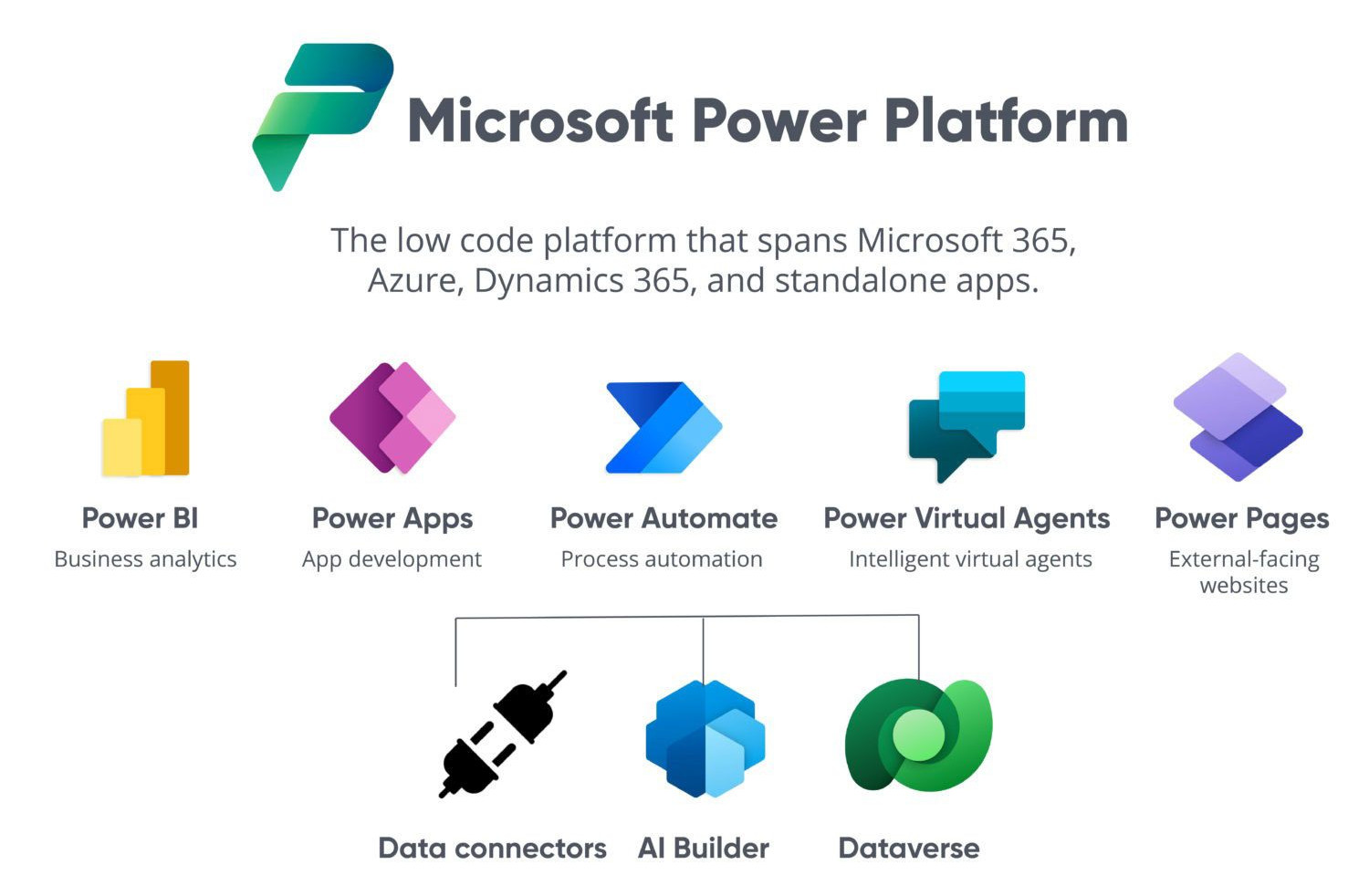

The Power of Microsoft's Low Code platform

As the low-code platform that integrates with Microsoft 365, Azure, and Dynamics 365, the Microsoft Power Platform is perfect for professionals who want to develop apps but lack the necessary programming knowledge. In addition, because of its integration with other Microsoft platforms, the Power Platform is perfect for companies that use Microsoft tools. But what are the different apps? And why is using the Power Platform so useful? What's so convenient about Low Code? In this blog, we try to answer all these questions.

What is Power Apps?

To put it in Microsoft’s words: “Power Apps is a suite of apps, services, and connectors, as well as a data platform, that provides a rapid development environment to build custom apps for your business needs.”

Power Apps enables you (an accountant, engineer, chef, CEO) to build applications that solve your problems regardless of their size.

What is low-code?

Low-code is a way to build applications quickly in a “drag and drop” environment. It enables anyone who wants to build apps quickly to create something without having to understand coding languages like Java or C++.

On top of that, low-code enables you to connect to a variety of back ends or third-party services without having to manually connect to an API or other complex connection tools.

What can low-code offer me?

- Automation

- Re-usability

- Scalability

- Rapid development

- Cross-platform applications

What are the low-code apps within the Microsoft Stack?

Power Apps

The bread and butter of the low-code Power Platform. It enables you to create applications that display data and allow you to interact with your data directly. Power Apps enables you to build applications for both mobile and web by using a drag-and-drop interface.

Power Automate

If you want to create automated processes running in the background, this is how you do it. If you want to send emails after new records are created, while at the same time notifying users, Power Automate does this with just a few clicks.

Power Virtual Agents

One of Microsoft’s newest offerings allows you to create chatbots using a low-code approach. Chatbots can be deployed internally on Teams or on your own website. They enable users or customers to find answers to problems without the need for a human-run support centre.

Power BI

With Power BI, you get even more out of Business Intelligence. Create stunning visuals that provide deep insights into your data and financials. Use forecasting to better predict changes in upcoming time periods and adjust your business planning accordingly.

Power Platform use cases

Imagine you’re an accountant who has to modify data in Excel based on parameters that someone emails to you daily. Based on these emails, you want an overview of all of your data to show to management during your monthly meetings and, on top of this, you want to be able to modify the data at the last minute if needed. The Power Platform and its low-code tools can help you automate a large part of your workflow. Here is how:

- We’ll use Power Automate to scrape the values from the email and modify the Excel sheet.

- Once the sheet is modified, we can create a dashboard in Power BI that gives a full overview to present at your monthly meetings.

- Next, we use Power Apps to create a small app from which you can modify your data, in case last-minute modifications are needed.

- Lastly, we could create a Virtual Agent to enable colleagues or clients to request copies of documents from a certain case file using a specified password.

Benefits of Low-code

- Save time and money

- No need to hire a full stack developer

- Custom solutions for custom problems

- Easy to implement

Rockfeather & Low-code

Here at Rockfeather, we know that not everyone has the budget to turn their business ideas into reality. That’s why we offer both low-code training and development services. We empower everyone to become a citizen developer, which enables you to build things in your own time, with your own tools. If you get stuck or need help, you can be sure that Rockfeather will be there to lend a hand.

For the more advanced projects, we use all of the tools above and more to create fully fledged desktop and mobile applications that can be exported and deployed to your environment seamlessly.

Power Automate or Logic Apps

Power Automate or Logic apps? Two similar automation tools, both on the Microsoft platform with a common look. With many of the same features the differentiation lies in the details.

Comparing features

Most notably, Logic Apps is aimed at more technically proficient users. Power Automate, by contrast, is aimed at citizen developers, with fewer in-depth features and more user-friendly options.

For most companies, Power Automate with the standard connectors is included in the Microsoft Office license. Customers interested in premium connectors, however, will need a premium license. Logic Apps is a pay-as-you-go service, meaning that you pay while the app is running.

Below are 3 key differences:

- Power Automate integrates well with the Power Platform, whereas Logic Apps integrates with Azure resources

- Logic Apps supports version control

- Power Automate supports robotic process automation

To further expand on the differences in licensing between Power Automate and Logic Apps, we summarized some key takeaways below:

- Logic Apps: Pay as you go. You only pay when your application is actively running

- Power Automate: Pay per Month. You pay a fixed fee per user per month, and it’s often already included in your Microsoft Office license (E1, E3 and E5)

Important details

Power Automate is part of the Microsoft 365 environment and the Power Platform, and its main aim is to automate tasks and work within the Power Platform. By comparison, Logic Apps is one of the solutions within Microsoft Azure Integration Services and is thus more commonly used for ETL processes and data integration. Logic Apps therefore integrates well with Azure but lacks the same integration with the Power Platform. What’s best for your business simply depends on the capabilities you need.

For instance, consider the following examples:

- A manager wants to get an email when a certain KPI value is reached. This KPI is calculated and presented in Power BI. Depending on the circumstances, this could be done with either Power Automate or Logic Apps.

- A finance employee needs to click a few buttons in a legacy application on his desktop once a day. With Power Automate RPA, this can be automated.

- Data needs to be extracted from a system and loaded into a data warehouse. For this process, Logic Apps provides the best pricing and the most flexibility.

- A monthly survey needs to be sent out. This is best done via Power Automate.

- A process must be approved through a button on a dashboard. Because of its Power Platform integration, Power Automate is the best choice here.

Compare for yourself

Interested in the strengths and weaknesses of both platforms? We have articles that dive deeper into both Logic Apps and Power Automate and all their pros and cons.
