Connecting APIs: how hard can it be?

Spoiler alert: it can be hard...

Building a data-driven solution starts with extracting data from your systems. For many cloud applications, this means working with APIs. In this blog post, we explain the fundamentals of APIs, walk through their common challenges, and share easy-to-use tools to kickstart your data-driven journey.

Fundamentals of APIs

At its core, an API (Application Programming Interface) is a set of rules that allows communication between different software applications. It serves as a bridge, enabling applications to exchange data. An API operates like a messenger; it takes requests, tells a system what you want to do, and then returns the system’s response. To see it in action, try copying and pasting the following link into your browser:

https://official-joke-api.appspot.com/jokes/programming/random

When you hit ‘Enter,’ a request is sent, and based on the API’s rules, you receive a random programming joke as a response, like this one:

[{"type":"programming","setup":"How much does a hipster weigh?","punchline":"An Instagram.","id":146}]

The response is in JSON (JavaScript Object Notation), the format most APIs use. JSON can be formatted to make it more human-readable. The formatted version below adds comments that explain the JSON syntax; the comments are not part of the JSON itself.

[ <-- start table

{ <-- start row

"type": "programming", <-- column: value

"setup": "How much does a hipster weigh?",

"punchline": "An Instagram.",

"id": 146

} <-- end row

] <-- end table

The same data in table format:

| type | setup | punchline | id |
|---|---|---|---|
| programming | How much does a hipster weigh? | An Instagram. | 146 |

Congratulations! You’ve taken your first steps into the world of APIs. While this example was straightforward, it’s worth noting that not all APIs are as simple.
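The browser step above can also be done from code. The following is a minimal Python sketch using only the standard library. The function names (`parse_jokes`, `fetch_jokes`, `pretty`) are our own illustrative choices, and the sketch assumes the endpoint keeps returning a JSON array like the one shown earlier.

```python
import json
import urllib.request

# The same URL you pasted into the browser.
JOKE_URL = "https://official-joke-api.appspot.com/jokes/programming/random"


def parse_jokes(body: str) -> list:
    """Turn the raw JSON text into a list of rows (one dict per joke)."""
    data = json.loads(body)
    # The endpoint returns a JSON array; wrap a single object just in case.
    return data if isinstance(data, list) else [data]


def fetch_jokes(url: str = JOKE_URL, timeout: float = 10.0) -> list:
    """Send a GET request, as the browser does, and parse the response."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return parse_jokes(response.read().decode("utf-8"))


def pretty(jokes: list) -> str:
    """Format the rows as indented, human-readable JSON."""
    return json.dumps(jokes, indent=2)
```

Calling `print(pretty(fetch_jokes()))` sends the request and prints the formatted response. Since the joke is random, the output differs on every call.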

Challenges working with APIs

Connecting to an API is a powerful way to access data, but it comes with its own set of challenges. Here are the most common ones:

1. Authentication:

Establishing secure access to APIs can be tricky. Many APIs require authentication to ensure only authorized users or applications can access their data. Authentication methods vary: API keys, OAuth tokens, or custom mechanisms. The challenge is to understand which method a specific API expects and to implement it correctly. An incorrect implementation leads to the notorious “401 Unauthorized” error.
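As a rough illustration, two common styles are an API key sent in a request header and an OAuth access token sent as a Bearer token. The sketch below is hedged: the header name `X-API-Key` and the function names are assumptions for illustration, and the documentation of your API decides what is actually expected.

```python
import urllib.error
import urllib.request
from typing import Optional


def build_auth_headers(
    api_key: Optional[str] = None,
    bearer_token: Optional[str] = None,
    api_key_header: str = "X-API-Key",  # assumed name; check the API docs
) -> dict:
    """Return the HTTP headers for one of two common authentication styles."""
    if bearer_token:
        # OAuth-style access tokens are usually sent as a Bearer token.
        return {"Authorization": f"Bearer {bearer_token}"}
    if api_key:
        return {api_key_header: api_key}
    raise ValueError("Provide either an API key or a bearer token")


def get_with_auth(url: str, headers: dict, timeout: float = 10.0) -> str:
    """GET a URL with authentication headers and explain a 401 clearly."""
    request = urllib.request.Request(url, headers=headers)
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.read().decode("utf-8")
    except urllib.error.HTTPError as error:
        if error.code == 401:
            raise PermissionError(
                "401 Unauthorized: check the key or token and how it is sent"
            ) from error
        raise
```

Keeping the header construction separate from the request makes it easy to switch authentication styles without touching the rest of the code.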

2. Pagination:

For performance reasons, APIs often limit the amount of data returned in each response. A large response is therefore split into smaller pages. Building logic that iterates through the pages until all data is retrieved can be tricky. A frequent error is “404 Not Found,” which may occur when you request a page that does not exist.
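The page loop can be sketched as follows. This assumes page-number pagination in which an empty page marks the end; many APIs use cursors or "next" links instead. `fetch_page` is a hypothetical function you supply: it requests one page and returns its records, or an empty list when the page does not exist (for instance by translating a 404).

```python
from typing import Callable, Iterator, List


def iterate_records(
    fetch_page: Callable[[int], List[dict]],
    first_page: int = 1,
    max_pages: int = 1000,
) -> Iterator[dict]:
    """Yield records page by page until an empty page is returned."""
    # max_pages is a safety net against an API that never returns an empty page.
    for page in range(first_page, first_page + max_pages):
        records = fetch_page(page)
        if not records:
            return  # no more data: stop instead of requesting further pages
        yield from records
```

Because the function is a generator, records are processed as they arrive, and no further pages are requested once the empty one is seen.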

3. Rate Limiting:

APIs often enforce usage limits within specific timeframes to protect themselves and the underlying systems. Exceeding these limits triggers the “429 Too Many Requests” error code as a protective measure.
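A common way to cope with a 429 is to wait and try again. The sketch below is one possible approach, not a definitive implementation. `send` is a hypothetical function that performs one request and returns a `(status, headers, body)` tuple. The code waits for the number of seconds in the `Retry-After` header when the API provides one, and otherwise doubles the delay after each attempt.

```python
import time
from typing import Callable, Mapping, Tuple

Response = Tuple[int, Mapping[str, str], str]


def call_with_retry(
    send: Callable[[], Response],
    max_attempts: int = 5,
    base_delay: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> Response:
    """Retry a request while the API answers 429 Too Many Requests."""
    for attempt in range(max_attempts):
        status, headers, body = send()
        if status != 429:
            return status, headers, body
        if attempt < max_attempts - 1:
            # Only the seconds form of Retry-After is handled here; the
            # header may also contain an HTTP date, which this sketch ignores.
            retry_after = headers.get("Retry-After")
            if retry_after and retry_after.isdigit():
                delay = float(retry_after)
            else:
                delay = base_delay * 2 ** attempt  # exponential backoff
            sleep(delay)
    raise RuntimeError(f"Still rate limited after {max_attempts} attempts")
```

Passing `sleep` as a parameter keeps the function easy to test without real waiting.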

4. Documentation:

API documentation is your ally during the API integration journey. In the documentation, you will find the available requests, the authentication methods, pagination guidelines, and any limits the API enforces.

While these challenges might seem overwhelming, tools are available to simplify the connection setup and overcome most hurdles.

Tools for extracting data from APIs

Numerous tools can perform the same trick: connecting to an API, extracting data, and storing it somewhere safe, often a database. We’ll spotlight the tools we commonly use at Rockfeather, each with its own strengths. Many alternatives exist.

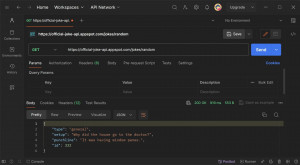

1. Postman

Postman is a versatile API testing tool primarily focused on debugging and creating APIs. It’s user-friendly and has many features to help you get started with a connection to an API. Its strengths lie in exploration and understanding; note that Postman cannot automatically store data in a database.

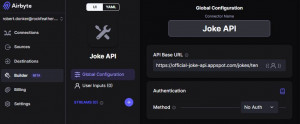

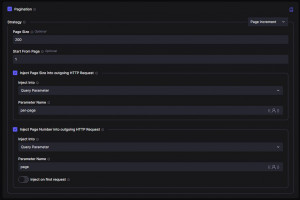

2. Airbyte

Airbyte is an open-source platform for data integration. It offers a no-code interface. With over 350 pre-built data connectors, it provides a simple yet robust starting point that seamlessly scales alongside your growing data demands.

One notable feature of Airbyte is its support for custom connectors. If no pre-built connector fits, you can build your own in a low-code interface. This interface comes with built-in features for authentication and pagination, which sets Airbyte apart from the many tools that lack them.

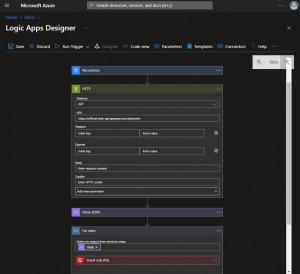

3. Azure Logic Apps

Azure Logic Apps is a cloud-based service for building automated workflows in a visual designer, which makes it accessible even without extensive coding expertise. With 450 pre-built data connectors and an extensive toolkit for custom connectors, it is a flexible, general-purpose tool. Its sweet spot is more complex APIs with lower data volumes.

Beyond data integration, Azure Logic Apps excels at task automation, such as sending emails, alerts, surveys, and approvals, and at system integrations that move data between systems, for example synchronizing customer information between an ERP and a CRM system.

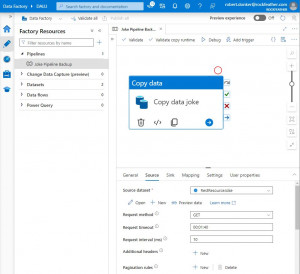

4. Azure Data Factory

Azure Data Factory is a cloud-based service for constructing data pipelines. It has a low-code interface with predefined building blocks. It performs best when moving high volumes of data from a database or one of its other 80+ connectors. However, it’s less suited to custom connections.

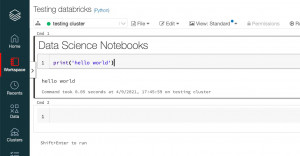

5. Databricks

Databricks is a cloud-based platform for processing big data and machine learning workloads. You work in notebooks that you create and execute on the platform. By writing Python code, you can connect to virtually any data source and leverage existing Python packages. However, Databricks demands deeper coding knowledge to fully unlock its capabilities.
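To give a feel for the kind of Python you might write in a notebook, here is a small sketch that turns API records (a list of dictionaries, like the joke response) into CSV text. It uses only the standard library and nothing specific to Databricks. The function name `records_to_csv` is our own, and the columns are assumed to be the keys of the first record.

```python
import csv
import io


def records_to_csv(records: list) -> str:
    """Convert a list of dicts into CSV text with a header row."""
    if not records:
        return ""
    # Assumption: the first record defines the columns.
    fieldnames = list(records[0].keys())
    buffer = io.StringIO()
    # Extra keys in later records are ignored; missing keys become empty cells.
    writer = csv.DictWriter(buffer, fieldnames=fieldnames, extrasaction="ignore")
    writer.writeheader()
    writer.writerows(records)
    return buffer.getvalue()
```

In practice you would likely hand the records to a dataframe library instead, but the idea is the same: the JSON rows become a table.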

Conclusion

In conclusion, these tools offer diverse approaches to extracting data from APIs, accommodating various preferences, skill levels, business requirements, and data sources. A combination of tools may prove more effective for your specific needs; Azure Logic Apps and Azure Data Factory, for example, are a common and powerful pairing.

Starting your data journey and connecting to APIs is challenging, especially for beginners. Despite the hurdles, there are tools to assist you. The key is to take that first step.

Want to learn more about the tools we use at Rockfeather? Join us for the Data & Automation Line Up on May 16th. Let’s simplify the process and explore the world of data together.
